Hyperspectral and Multispectral Imaging

Hyperspectral and multispectral imaging are two related technologies that have grown in prominence and utility over the past two decades. The terms are often used interchangeably, but they describe two distinct imaging methods, each with its own application space. Both technologies have advantages over conventional machine vision imaging, which uses light from the visible spectrum (400-700nm). However, these benefits come at the cost of increased system complexity in lighting, filtering, and optical design.

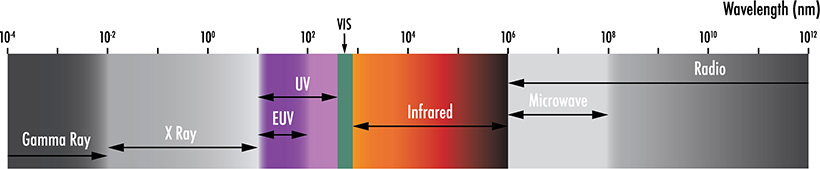

In typical machine vision applications, the illumination that the sensor captures is in the visible spectrum. This is the only part of the spectrum the human eye can detect, ranging from roughly 400nm (violet) to 700nm (dark red) (Figure 1). Imaging lens assemblies and sensors typically have peak spectral sensitivity around 550nm. The quantum efficiency of a camera sensor describes how effectively it converts incident photons into an electrical signal, and it decreases significantly in the ultraviolet and near infrared. In the simplest terms, hyperspectral imaging (HSI) is a method for capturing images that contain information from a broader portion of the electromagnetic spectrum. This portion can start in the UV, extend through the visible spectrum, and end in the near or short-wave infrared. The extended wavelength range can reveal properties of material composition that are not otherwise apparent.

Figure 1: Only a small portion of the electromagnetic spectrum is visible to the human eye; wavelength regions outside the visible spectrum are utilized in hyperspectral and multispectral imaging.
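
To make the quantum efficiency idea concrete, the sketch below converts a small optical power into a photon count using the photon energy E = hc/λ, then multiplies by quantum efficiency to estimate the signal electrons a pixel collects. The QE values (0.70 at 550nm, 0.15 at 950nm), the 1 pW power, and the 10 ms exposure are hypothetical numbers chosen for illustration, not data for any particular sensor.

```python
# Physical constants (exact SI values)
PLANCK_H = 6.62607015e-34      # J*s
SPEED_OF_LIGHT = 2.99792458e8  # m/s


def photon_count(power_w: float, exposure_s: float, wavelength_nm: float) -> float:
    """Photons delivered by a constant optical power over one exposure."""
    energy_per_photon_j = PLANCK_H * SPEED_OF_LIGHT / (wavelength_nm * 1e-9)
    return power_w * exposure_s / energy_per_photon_j


def photoelectrons(power_w: float, exposure_s: float, wavelength_nm: float, qe: float) -> float:
    """Signal electrons = quantum efficiency x incident photons."""
    if not 0.0 <= qe <= 1.0:
        raise ValueError("quantum efficiency must be between 0 and 1")
    return qe * photon_count(power_w, exposure_s, wavelength_nm)


# Hypothetical: 1 pW on one pixel for 10 ms, with assumed QE values at two wavelengths
for wavelength_nm, qe in [(550, 0.70), (950, 0.15)]:
    electrons = photoelectrons(1e-12, 0.01, wavelength_nm, qe)
    print(f"{wavelength_nm} nm, QE {qe:.2f}: ~{electrons:,.0f} electrons")
```

Because longer-wavelength photons carry less energy, the same optical power delivers more photons at 950nm, yet the lower assumed QE still yields a weaker signal there.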

Machine vision sensors output arrays of grayscale values that form a 2D image of the object within the field of view. These images are generally used for feature recognition to sort, measure, or locate objects. Unless optical filters are used, the vision system has no information about which wavelengths are being used for illumination. Sensors with a Bayer pattern (RGB) filter are a partial exception, but even then each pixel only accepts light from a single color band, and the camera software ultimately assigns color. In a truly hyperspectral image, each pixel carries spatial coordinates, signal intensity, and wavelength information. For this reason, HSI is often referred to as imaging spectroscopy.1
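
The Bayer arrangement can be sketched as a 2x2 filter tile repeated across the sensor. The snippet below assumes an RGGB layout (BGGR, GRBG, and GBRG variants also exist) and shows that each pixel sits under exactly one color filter, with half the pixels green and a quarter each red and blue. The two missing color values at every pixel must be interpolated in software (demosaicing), which is why the camera, not the pixel, ultimately assigns color.

```python
# Assumed RGGB Bayer tile; the 2x2 pattern repeats over the whole sensor.
RGGB = (("R", "G"),
        ("G", "B"))


def filter_at(row: int, col: int, pattern=RGGB) -> str:
    """Color filter covering the pixel at (row, col)."""
    return pattern[row % 2][col % 2]


def filter_fractions(rows: int, cols: int, pattern=RGGB) -> dict:
    """Fraction of sensor pixels under each color filter."""
    counts = {}
    for r in range(rows):
        for c in range(cols):
            color = filter_at(r, c, pattern)
            counts[color] = counts.get(color, 0) + 1
    total = rows * cols
    return {color: n / total for color, n in counts.items()}


print(filter_fractions(4, 4))  # {'R': 0.25, 'G': 0.5, 'B': 0.25}
```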

As a quick aside, a spectrometer collects wavelength information as well as relative intensity information for the different wavelengths detected.2 These devices typically collect light from a single source or location on a sample. A spectrometer can be used to detect substances that scatter and reflect specific wavelengths, or to determine material composition from fluorescent or phosphorescent emissions. An HSI system extends this capability by assigning positional data to each collected spectrum. Rather than a 2D image, a hyperspectral system outputs a hyperspectral data cube, or image cube, with two spatial dimensions and one spectral dimension.3
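
A minimal way to picture the data cube is as a rows x cols x bands array. The sketch below stores it as a flat Python list in band-interleaved-by-pixel (BIP) order, one of several common layouts alongside band-sequential (BSQ) and band-interleaved-by-line (BIL); the `DataCube` class and its method names are hypothetical. Reading along the spectral axis at one position gives what a spectrometer would report for that spot, while a slice at one band gives an ordinary grayscale image.

```python
from typing import List


class DataCube:
    """Minimal hyperspectral cube: a rows x cols spatial grid with one spectrum per pixel.

    Values are kept in a flat list in band-interleaved-by-pixel (BIP) order.
    """

    def __init__(self, rows: int, cols: int, wavelengths_nm: List[float]):
        self.rows, self.cols = rows, cols
        self.wavelengths_nm = list(wavelengths_nm)
        self.bands = len(self.wavelengths_nm)
        self.data = [0.0] * (rows * cols * self.bands)

    def _index(self, row: int, col: int, band: int) -> int:
        return (row * self.cols + col) * self.bands + band

    def set_spectrum(self, row: int, col: int, values: List[float]) -> None:
        if len(values) != self.bands:
            raise ValueError("spectrum length must equal the number of bands")
        start = self._index(row, col, 0)
        self.data[start:start + self.bands] = list(values)

    def spectrum(self, row: int, col: int) -> List[float]:
        """All band intensities at one spatial position."""
        start = self._index(row, col, 0)
        return self.data[start:start + self.bands]

    def band_image(self, band: int) -> List[List[float]]:
        """A 2D grayscale image at a single wavelength (one slice of the cube)."""
        return [[self.data[self._index(r, c, band)] for c in range(self.cols)]
                for r in range(self.rows)]


cube = DataCube(2, 3, [500, 600, 700, 800])
cube.set_spectrum(1, 2, [0.1, 0.2, 0.3, 0.4])
print(cube.spectrum(1, 2))  # [0.1, 0.2, 0.3, 0.4]
print(cube.band_image(3))   # [[0.0, 0.0, 0.0], [0.0, 0.0, 0.4]]
```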

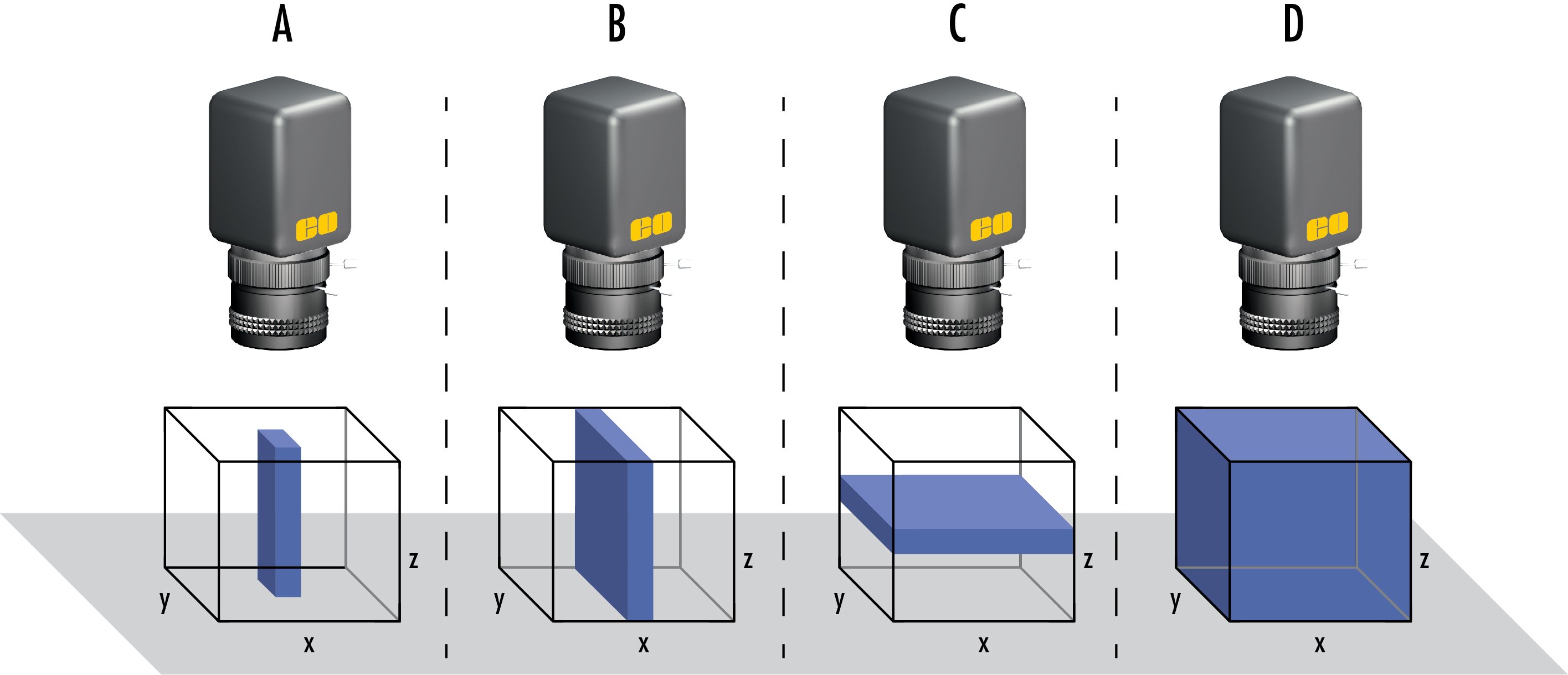

There are four primary hyperspectral acquisition modes, each with its own advantages and disadvantages (Figure 2). The whiskbroom method is a point-scanning process that acquires the spectral information for one spatial coordinate at a time. This method tends to offer the highest spectral resolution, but the system must scan the target area along both the x and y axes, which significantly increases total acquisition time.1 The pushbroom method is a line-scanning process: a row of pixels sweeps over the area, capturing spectral and positional information, so only a single axis of spatial movement is required. Pushbroom systems can have “compact size, low weight, simpler operation and higher signal to noise ratio.”1 With this method, exposure timing is critical; incorrect timing introduces inconsistent saturation or underexposure of spectral bands. Plane scanning images the entire 2D area at once, but one wavelength interval at a time, so numerous image captures are needed to build the spectral depth of the data cube. While this capture method does not require translation of the sensor or the full system, the subject must not move during acquisition; otherwise, the accuracy of the positional and spectral information will be compromised. The fourth and most recently developed mode of hyperspectral acquisition is referred to as single shot or snapshot. A single shot imager collects the entire hyperspectral data cube within a single integration period.1 Although single shot appears to be the preferred future of HSI implementation, it is currently limited by comparatively low spatial resolution and requires further development.1

Figure 2: The four primary hyperspectral acquisition modes including (A) point scanning, or whiskbroom mode, (B) line scanning, or pushbroom mode, (C) plane scanning, or area scanning mode, and (D) single shot mode.
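
One way to compare the four modes is to count how many separate exposures each needs to fill a cube of a given size and, for pushbroom, how fast lines must be captured. The sketch assumes an idealized scanner (no overlap, mirror flyback, or readout overhead); the 512 x 640 x 200 cube, the 5 m/s platform speed, and the 1 cm ground sample distance are hypothetical. Setting the line rate to platform speed divided by ground sample distance keeps adjacent lines from overlapping or leaving gaps, and its inverse caps the exposure time available per line.

```python
def exposures_required(mode: str, rows: int, cols: int, bands: int) -> int:
    """Separate exposures needed to fill a rows x cols x bands cube (idealized).

    For pushbroom, `rows` is the number of lines along the scan direction and
    each exposure records one full line of `cols` pixels in all bands.
    """
    if mode == "whiskbroom":  # one spatial point, all bands, per exposure
        return rows * cols
    if mode == "pushbroom":   # one line of pixels, all bands, per exposure
        return rows
    if mode == "plane":       # full 2D frame, one wavelength band per exposure
        return bands
    if mode == "snapshot":    # entire cube in a single integration period
        return 1
    raise ValueError(f"unknown acquisition mode: {mode}")


def pushbroom_line_rate_hz(ground_speed_m_s: float, ground_sample_distance_m: float) -> float:
    """Line rate at which each new line lands exactly one pixel footprint further along track."""
    return ground_speed_m_s / ground_sample_distance_m


for mode in ("whiskbroom", "pushbroom", "plane", "snapshot"):
    print(mode, exposures_required(mode, rows=512, cols=640, bands=200))

rate = pushbroom_line_rate_hz(ground_speed_m_s=5.0, ground_sample_distance_m=0.01)
print(f"line rate {rate:.0f} Hz, max exposure per line {1000 / rate:.1f} ms")
```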

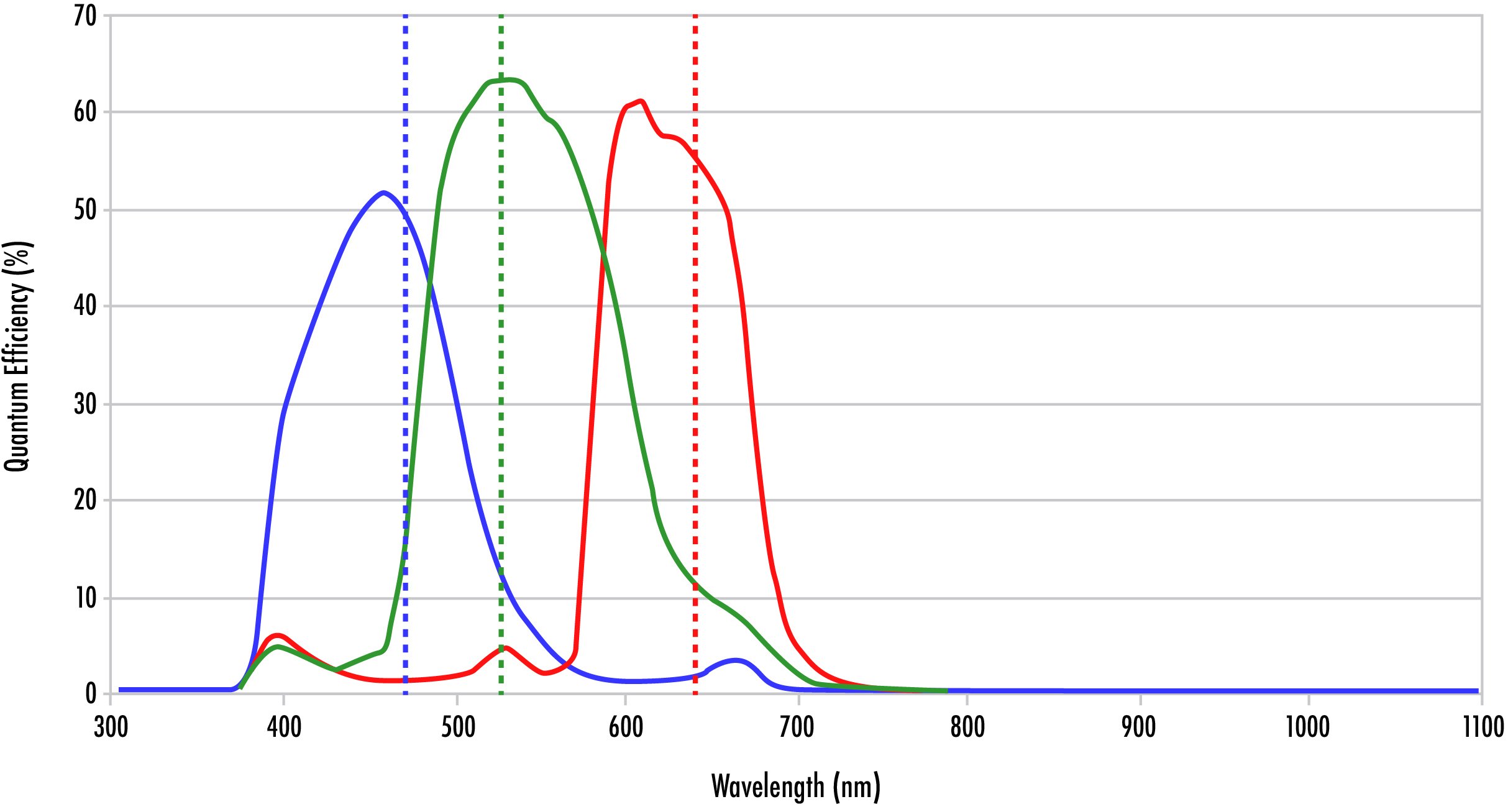

A multispectral imaging (MSI) system is similar to a hyperspectral one but has key differences. Whereas an HSI system collects effectively continuous wavelength data, an MSI system concentrates on several wavebands preselected for the application at hand. While not a direct comparison, common RGB sensors help illustrate this concept. RGB sensors are overlaid with a Bayer pattern of red, green, and blue filters. Each filter transmits wavelengths from one color band to the pixel beneath it and attenuates the rest of the light. These bandpass filters have transmission bands within the 400-700nm range and overlap slightly, as shown in Figure 3. The captured color channels are then combined into a color image that approximates what the human eye sees. In most multispectral imaging applications, the wavelength bands are significantly narrower and more numerous. The wavebands are commonly on the order of tens of nanometers wide and are not limited to the visible spectrum. Depending on the application, UV, NIR, and thermal (mid-wave IR) wavelengths can have isolated channels as well.4

Figure 3: Quantum efficiency curves for an RGB camera, showing the overlap between the red, green, and blue channels.
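
The difference between contiguous and preselected bands can be illustrated by collapsing a densely sampled spectrum into a handful of bands. The sketch below assumes idealized rectangular passbands (real filters have sloped edges and can overlap, as the RGB curves in Figure 3 do) and a synthetic linear spectrum; the band names, centers, and widths are hypothetical. Emulating candidate bands from hyperspectral data in this way is one way to explore which filters an MSI system should use.

```python
def band_average(wavelengths_nm, values, center_nm, width_nm):
    """Mean of the samples that fall inside an idealized rectangular passband."""
    lo, hi = center_nm - width_nm / 2, center_nm + width_nm / 2
    inside = [v for w, v in zip(wavelengths_nm, values) if lo <= w <= hi]
    if not inside:
        raise ValueError(f"no samples between {lo} and {hi} nm")
    return sum(inside) / len(inside)


def to_multispectral(wavelengths_nm, values, bands):
    """Collapse a contiguous spectrum into a few preselected bands.

    `bands` maps a band name to (center_nm, width_nm).
    """
    return {name: band_average(wavelengths_nm, values, center, width)
            for name, (center, width) in bands.items()}


# Synthetic spectrum sampled every 1 nm from 400 to 1000 nm (hypothetical data)
wl = list(range(400, 1001))
spectrum = [w / 1000 for w in wl]

# Hypothetical band set: two visible bands and one NIR band
bands = {"blue": (450, 20), "red": (650, 20), "nir": (850, 40)}
msi = to_multispectral(wl, spectrum, bands)
print(msi)
```

Here 601 spectral samples become three numbers per pixel, which is the essence of the MSI trade-off.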

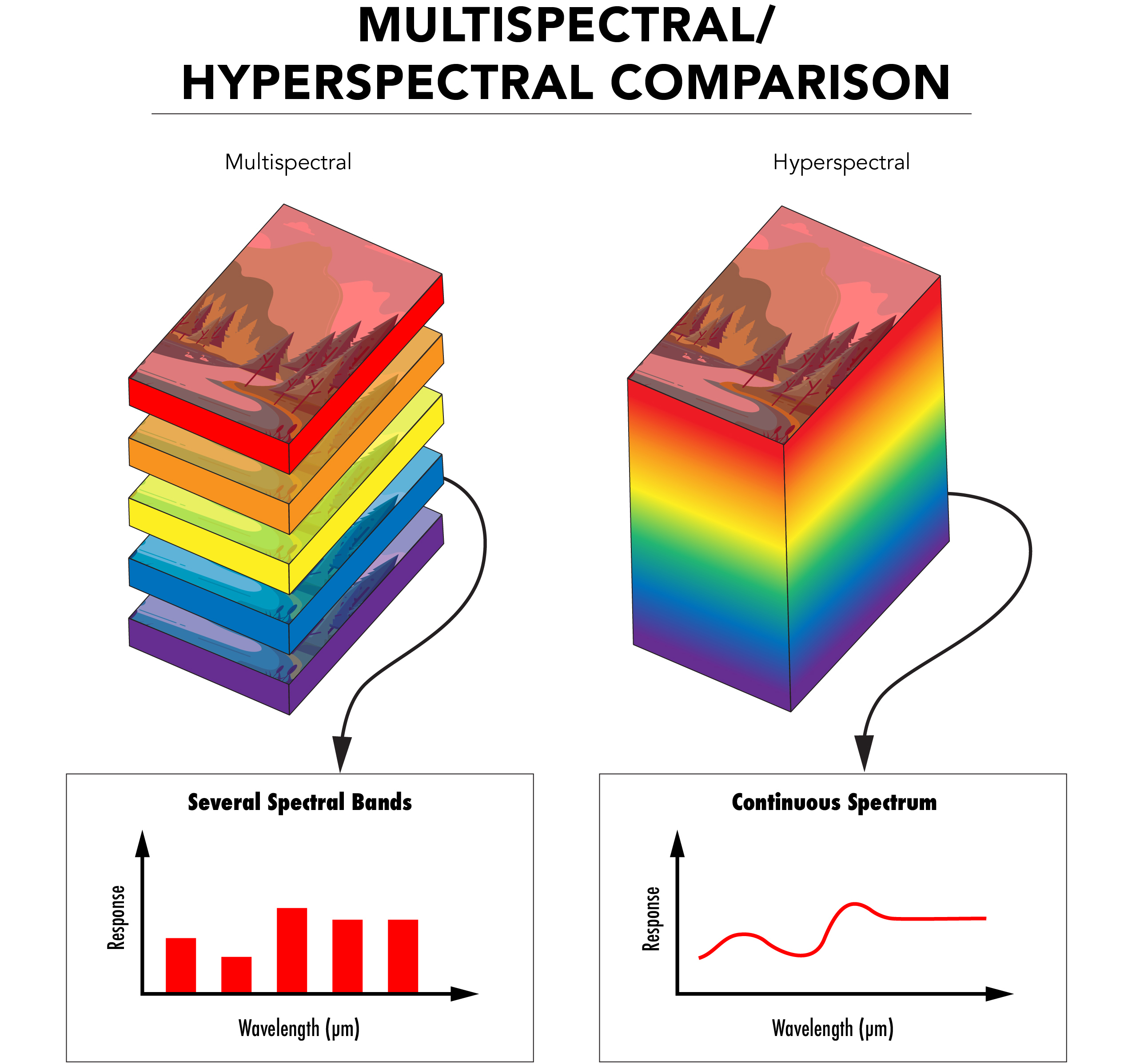

Some see MSI as an inferior form of HSI, one with lower spectral resolution. In truth, each technology has its own advantages that make it the preferred tool for different tasks. HSI is best suited for applications sensitive to subtle differences in signal along a continuous spectrum, which a system sampling broader wavebands could miss. However, some applications require large portions of the electromagnetic spectrum to be blocked so that only selected wavelengths are captured (Figure 4); the other wavelengths could add enough noise to ruin measurements and observations. In addition, when the data cube contains less spectral information, image capture, processing, and analysis can happen more quickly.

Figure 4: Comparison of the image stacks in multispectral imaging, in which images are taken in several spectral bands, and hyperspectral imaging, in which images are taken in many spectral bands.
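
The speed advantage of fewer bands follows directly from data volume, which scales linearly with the number of bands. Assuming a hypothetical 1024 x 1024 pixel frame stored at 2 bytes per sample, a 224-band hyperspectral cube is roughly 45 times larger than a 5-band multispectral cube; the band counts are illustrative, not taken from a specific instrument.

```python
def cube_size_bytes(rows: int, cols: int, bands: int, bytes_per_sample: int = 2) -> int:
    """Uncompressed size of one data cube."""
    return rows * cols * bands * bytes_per_sample


def mib(n_bytes: int) -> float:
    """Convert bytes to mebibytes."""
    return n_bytes / 2**20


hsi = cube_size_bytes(1024, 1024, 224)  # hypothetical hyperspectral cube
msi = cube_size_bytes(1024, 1024, 5)    # hypothetical 5-band multispectral cube
print(f"HSI: {mib(hsi):.0f} MiB, MSI: {mib(msi):.0f} MiB, ratio {hsi / msi:.1f}x")
# HSI: 448 MiB, MSI: 10 MiB, ratio 44.8x
```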

The number of applications that rely on HSI and MSI continues to grow. Remote sensing, the imaging of Earth’s surface from unmanned aerial vehicles (UAVs) and satellites, has relied on both HSI and MSI for decades. Imaging in suitable spectral bands can see through the atmosphere, haze, and some cloud cover for a clearer view of the ground below. This technology can be used to monitor changes in population, observe geological transformations, and study archeological sites. HSI and MSI have also become increasingly critical in environmental science. Data can be collected on deforestation, ecosystem degradation, carbon cycling, and increasingly erratic weather patterns. Researchers use this information to build predictive models of global ecology, which inform many initiatives meant to combat the negative effects of climate change and human influence on nature.6

The same is true in the medical field. With the help of hyperspectral imaging, doctors can perform non-invasive skin scans to detect diseased or malignant cells. Certain wavelengths penetrate deeper into the skin, providing a more detailed understanding of a patient’s condition. Cancerous and other diseased cells can be distinguished from healthy tissue because they fluoresce and absorb light differently under the appropriate excitation. Rather than relying only on what they can see and a patient’s description of symptoms, doctors can base decisions on measured spectral data. Sophisticated systems can record and automatically interpret this data, leading to significantly faster diagnoses and targeted treatment of the exact areas of need.5
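
As an illustration of automatic interpretation, the sketch below uses the spectral angle mapper, a widely used generic technique that labels a pixel by the smallest angle between its spectrum and a set of reference spectra. The source does not say which algorithms clinical systems use, so this is an assumed example; the five-band "healthy" and "lesion" reference spectra and the 0.1 rad threshold are invented for illustration. Because the angle ignores vector length, a uniformly dimmer version of a reference spectrum still matches it.

```python
import math


def spectral_angle(a, b):
    """Angle in radians between two spectra treated as vectors."""
    if len(a) != len(b):
        raise ValueError("spectra must have the same number of bands")
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0:
        raise ValueError("cannot compare an all-zero spectrum")
    cos_angle = max(-1.0, min(1.0, dot / norm))  # clamp floating-point rounding
    return math.acos(cos_angle)


def classify(pixel, references, max_angle_rad=0.1):
    """Closest reference label, or 'unclassified' if none is within the threshold."""
    best_label, best_angle = None, float("inf")
    for label, ref in references.items():
        angle = spectral_angle(pixel, ref)
        if angle < best_angle:
            best_label, best_angle = label, angle
    return best_label if best_angle <= max_angle_rad else "unclassified"


references = {  # hypothetical 5-band reference spectra
    "healthy": [0.30, 0.35, 0.50, 0.60, 0.62],
    "lesion": [0.20, 0.22, 0.25, 0.40, 0.55],
}
pixel = [0.15, 0.175, 0.25, 0.30, 0.31]  # same shape as 'healthy', half as bright
print(classify(pixel, references))  # healthy
```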

Life sciences and remote sensing are just two of the fields in which these technologies have made a large footprint. More specific market areas include agriculture, food quality and safety, pharmaceuticals, and healthcare.3 Farmers find these tools particularly useful for monitoring the growth of their crops. Tractors and drones can be equipped with spectral imagers that scan over fields, performing a form of low-altitude remote sensing. Farmers then analyze the spectral characteristics of the captured images to assess the general health of the plants, the state of the soil, which regions have been treated with certain chemicals, and whether something harmful, such as an infection, is present. Each of these conditions has unique spectral markers that can be captured, analyzed, and used to optimize crop production.
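
A widely used example of such a spectral marker is the Normalized Difference Vegetation Index, NDVI = (NIR - Red) / (NIR + Red), which exploits the fact that healthy vegetation reflects strongly in the NIR while chlorophyll absorbs red light. The sketch computes it per pixel from two band images; the reflectance values are hypothetical, and NDVI is only one of many indices, not necessarily what a given farm system uses.

```python
def ndvi(nir: float, red: float) -> float:
    """Normalized Difference Vegetation Index, ranging from -1 to 1."""
    total = nir + red
    if total == 0:
        return 0.0  # assumption: treat a completely dark pixel as 0
    return (nir - red) / total


def ndvi_map(nir_band, red_band):
    """Per-pixel NDVI for two equally sized 2D band images (lists of rows)."""
    return [[ndvi(n, r) for n, r in zip(nir_row, red_row)]
            for nir_row, red_row in zip(nir_band, red_band)]


# Hypothetical reflectances: dense canopy, stressed crop, bare soil
nir = [[0.50, 0.30, 0.25]]
red = [[0.08, 0.15, 0.20]]
print([[round(v, 2) for v in row] for row in ndvi_map(nir, red)])  # [[0.72, 0.33, 0.11]]
```

Higher values indicate denser, healthier vegetation, so a map of NDVI over a field highlights stressed or bare patches at a glance.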

Although the application spaces that benefit from HSI and MSI are large and growing, limitations in current technology have slowed industry adoption. These systems are currently significantly more expensive than other machine vision components. The sensors must be more complex, have broader spectral sensitivity, and be precisely calibrated. Sensor chips often require substrates other than silicon, which is only sensitive from approximately 200-1000nm. Indium arsenide (InAs) or extended-range indium gallium arsenide (InGaAs) sensors can collect light up to 2600nm. Imaging from the NIR through the MWIR requires a mercury cadmium telluride (MCT or HgCdTe) sensor, an indium antimonide (InSb) focal plane array, a microbolometer, or another longer wavelength sensor. The sensors and pixels used in these systems also need to be larger than many machine vision sensors to attain the required sensitivity and spatial resolution.1
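
The wavelength limit of each detector material follows from its bandgap: a photon can only generate signal if its energy hc/λ exceeds the bandgap Eg, so the cutoff wavelength is λc ≈ 1.24 µm·eV / Eg. The sketch below uses approximate textbook bandgaps (room temperature unless noted); real devices vary with composition, temperature, and design. Standard InGaAs lattice-matched to InP cuts off near 1.7 µm, which is why reaching 2600nm requires indium-rich extended InGaAs compositions or InAs.

```python
HC_EV_UM = 1.23984  # Planck constant x speed of light, in eV*micrometers


def cutoff_wavelength_um(bandgap_ev: float) -> float:
    """Longest wavelength a photodetector absorbs: photon energy must exceed the bandgap."""
    if bandgap_ev <= 0:
        raise ValueError("bandgap must be positive")
    return HC_EV_UM / bandgap_ev


# Approximate bandgaps in eV (textbook values; real devices vary)
materials = {
    "Si": 1.12,
    "InGaAs (lattice-matched to InP)": 0.75,
    "InAs": 0.354,
    "InSb (cooled to 77 K)": 0.23,
}
for name, eg in materials.items():
    print(f"{name:32s} {eg:5.3f} eV -> {cutoff_wavelength_um(eg):.2f} um")
```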

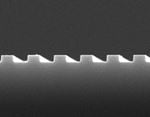

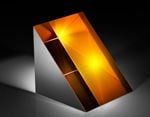

Another challenge is pairing these high-end sensors with the proper optical components. Spectral data recording relies heavily on bandpass filters, dispersive elements such as prisms or diffraction gratings, and even liquid crystal or acousto-optic tunable filters to separate light of differing wavelengths.7 Additionally, the lenses used with these cameras must be designed to perform well across broad wavelength ranges and temperature fluctuations. These designs typically require more optical elements, which increases cost and system weight. The elements need different refractive indices and dispersive properties for broadband color correction, and differing glass types also bring varied thermal and mechanical properties. After selecting glasses with appropriate internal transmission spectra, it is imperative to apply broadband multi-layer anti-reflection coatings to each lens to maximize light throughput. These many unique requirements make designing lenses for hyperspectral and multispectral imaging painstaking work that demands great skill. Certain application spaces also require the lens assemblies to be athermal, so that a system performs the same whether used on the ground or in the upper atmosphere.
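
The value of anti-reflection coatings, and the cost of adding elements, can be estimated with the normal-incidence Fresnel reflectance R = ((n1 - n2) / (n1 + n2))^2. For uncoated glass with n = 1.5, about 4% of the light is lost at every air-glass surface, and the losses compound over every surface in the lens. The 10-element lens and the 0.5% residual reflectance assumed for the coating are hypothetical round numbers, and the model ignores absorption and the wavelength dependence of both n and real coatings.

```python
def fresnel_reflectance_normal(n1: float, n2: float) -> float:
    """Fraction of light reflected at a single interface at normal incidence."""
    return ((n1 - n2) / (n1 + n2)) ** 2


def system_transmission(surface_reflectance: float, num_surfaces: int) -> float:
    """Throughput after reflection losses only (no absorption, no multiple reflections)."""
    return (1.0 - surface_reflectance) ** num_surfaces


r_uncoated = fresnel_reflectance_normal(1.0, 1.5)  # air to hypothetical n = 1.5 glass
surfaces = 2 * 10                                   # hypothetical 10-element lens
print(f"uncoated: {system_transmission(r_uncoated, surfaces):.3f}")           # 0.442
print(f"AR coated (R = 0.5%): {system_transmission(0.005, surfaces):.3f}")   # 0.905
```

Without coatings, a lens with this many elements would lose more than half of the incoming light to surface reflections alone.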

Future development goals are to make HSI and MSI systems more compact, affordable, and user friendly. These improvements should encourage new markets to adopt the technology and advance the markets that already use it.

References

- Hyperspectral Imaging: A Review on UAV-Based Sensors, Data Processing and Applications for Agriculture and Forestry.

- D. W. Ball, Field Guide to Spectroscopy, SPIE Press, Bellingham, WA (2006).

- Imaging in Dermatology, 2016; Chapter 16 – Hyperspectral and Multispectral Imaging in Dermatology.

- R. Paschotta, article on 'multispectral imaging' in the Encyclopedia of Laser Physics and Technology, 1st edition, October 2008, Wiley-VCH, ISBN 978-3-527-40828-3.

- Schneider, Armin, and Hubertus Feußner. Biomedical Engineering in Gastrointestinal Surgery. Academic Press, 2017.

- Unninayar, S., and L. Olsen. “Monitoring, Observations, and Remote Sensing – Global Dimensions.” Encyclopedia of Ecology, 2008, pp. 2425–2446., doi:10.1016/b978-008045405-4.00749-7.

- Schelkanova, I., et al. “Early Optical Diagnosis of Pressure Ulcers.” Biophotonics for Medical Applications, 2015, pp. 347–375., doi:10.1016/b978-0-85709-662-3.00013-0.

Copyright 2023, Edmund Optics Korea